Refactor high-complexity React components in Dify frontend. Use when `pnpm analyze-component...

npx skills add mvanhorn/last30days-skill

Or install specific skill: npx add-skill https://github.com/mvanhorn/last30days-skill

# Description

Research a topic from the last 30 days on Reddit + X + Web, become an expert, and write copy-paste-ready prompts for the user's target tool.

# SKILL.md

name: last30days

description: Research a topic from the last 30 days on Reddit + X + Web, become an expert, and write copy-paste-ready prompts for the user's target tool.

argument-hint: "[topic] for [tool]" or "[topic]"

context: fork

agent: Explore

disable-model-invocation: true

allowed-tools: Bash, Read, Write, AskUserQuestion, WebSearch

last30days: Research Any Topic from the Last 30 Days

Research ANY topic across Reddit, X, and the web. Surface what people are actually discussing, recommending, and debating right now.

Use cases:

- Prompting: "photorealistic people in Nano Banana Pro", "Midjourney prompts", "ChatGPT image generation" → learn techniques, get copy-paste prompts

- Recommendations: "best Claude Code skills", "top AI tools" → get a LIST of specific things people mention

- News: "what's happening with OpenAI", "latest AI announcements" → current events and updates

- General: any topic you're curious about → understand what the community is saying

CRITICAL: Parse User Intent

Before doing anything, parse the user's input for:

- TOPIC: What they want to learn about (e.g., "web app mockups", "Claude Code skills", "image generation")

- TARGET TOOL (if specified): Where they'll use the prompts (e.g., "Nano Banana Pro", "ChatGPT", "Midjourney")

- QUERY TYPE: What kind of research they want:

- PROMPTING - "X prompts", "prompting for X", "X best practices" → User wants to learn techniques and get copy-paste prompts

- RECOMMENDATIONS - "best X", "top X", "what X should I use", "recommended X" → User wants a LIST of specific things

- NEWS - "what's happening with X", "X news", "latest on X" → User wants current events/updates

- GENERAL - anything else → User wants broad understanding of the topic

Common patterns:

- [topic] for [tool] → "web mockups for Nano Banana Pro" → TOOL IS SPECIFIED

- [topic] prompts for [tool] → "UI design prompts for Midjourney" → TOOL IS SPECIFIED

- Just [topic] → "iOS design mockups" → TOOL NOT SPECIFIED, that's OK

- "best [topic]" or "top [topic]" → QUERY_TYPE = RECOMMENDATIONS

- "what are the best [topic]" → QUERY_TYPE = RECOMMENDATIONS

IMPORTANT: Do NOT ask about target tool before research.

- If tool is specified in the query, use it

- If tool is NOT specified, run research first, then ask AFTER showing results

Store these variables:

- TOPIC = [extracted topic]

- TARGET_TOOL = [extracted tool, or "unknown" if not specified]

- QUERY_TYPE = [RECOMMENDATIONS | NEWS | HOW-TO | GENERAL]

Setup Check

The skill works in three modes based on available API keys:

- Full Mode (both keys): Reddit + X + WebSearch - best results with engagement metrics

- Partial Mode (one key): Reddit-only or X-only + WebSearch

- Web-Only Mode (no keys): WebSearch only - still useful, but no engagement metrics

API keys are OPTIONAL. The skill will work without them using WebSearch fallback.

First-Time Setup (Optional but Recommended)

If the user wants to add API keys for better results:

mkdir -p ~/.config/last30days

cat > ~/.config/last30days/.env << 'ENVEOF'

# last30days API Configuration

# Both keys are optional - skill works with WebSearch fallback

# For Reddit research (uses OpenAI's web_search tool)

OPENAI_API_KEY=

# For X/Twitter research (uses xAI's x_search tool)

XAI_API_KEY=

ENVEOF

chmod 600 ~/.config/last30days/.env

echo "Config created at ~/.config/last30days/.env"

echo "Edit to add your API keys for enhanced research."

DO NOT stop if no keys are configured. Proceed with web-only mode.

Research Execution

IMPORTANT: The script handles API key detection automatically. Run it and check the output to determine mode.

Step 1: Run the research script

python3 ~/.claude/skills/last30days/scripts/last30days.py "$ARGUMENTS" --emit=compact 2>&1

The script will automatically:

- Detect available API keys

- Show a promo banner if keys are missing (this is intentional marketing)

- Run Reddit/X searches if keys exist

- Signal if WebSearch is needed

Step 2: Check the output mode

The script output will indicate the mode:

- "Mode: both" or "Mode: reddit-only" or "Mode: x-only": Script found results, WebSearch is supplementary

- "Mode: web-only": No API keys, Claude must do ALL research via WebSearch

Step 3: Do WebSearch

For ALL modes, do WebSearch to supplement (or provide all data in web-only mode).

Choose search queries based on QUERY_TYPE:

If RECOMMENDATIONS ("best X", "top X", "what X should I use"):

- Search for: best {TOPIC} recommendations

- Search for: {TOPIC} list examples

- Search for: most popular {TOPIC}

- Goal: Find SPECIFIC NAMES of things, not generic advice

If NEWS ("what's happening with X", "X news"):

- Search for: {TOPIC} news 2026

- Search for: {TOPIC} announcement update

- Goal: Find current events and recent developments

If PROMPTING ("X prompts", "prompting for X"):

- Search for: {TOPIC} prompts examples 2026

- Search for: {TOPIC} techniques tips

- Goal: Find prompting techniques and examples to create copy-paste prompts

If GENERAL (default):

- Search for: {TOPIC} 2026

- Search for: {TOPIC} discussion

- Goal: Find what people are actually saying

For ALL query types:

- USE THE USER'S EXACT TERMINOLOGY - don't substitute or add tech names based on your knowledge

- If user says "ChatGPT image prompting", search for "ChatGPT image prompting"

- Do NOT add "DALL-E", "GPT-4o", or other terms you think are related

- Your knowledge may be outdated - trust the user's terminology

- EXCLUDE reddit.com, x.com, twitter.com (covered by script)

- INCLUDE: blogs, tutorials, docs, news, GitHub repos

- DO NOT output "Sources:" list - this is noise, we'll show stats at the end

Step 3: Wait for background script to complete

Use TaskOutput to get the script results before proceeding to synthesis.

Depth options (passed through from user's command):

- --quick → Faster, fewer sources (8-12 each)

- (default) → Balanced (20-30 each)

- --deep → Comprehensive (50-70 Reddit, 40-60 X)

Judge Agent: Synthesize All Sources

After all searches complete, internally synthesize (don't display stats yet):

The Judge Agent must:

1. Weight Reddit/X sources HIGHER (they have engagement signals: upvotes, likes)

2. Weight WebSearch sources LOWER (no engagement data)

3. Identify patterns that appear across ALL three sources (strongest signals)

4. Note any contradictions between sources

5. Extract the top 3-5 actionable insights

Do NOT display stats here - they come at the end, right before the invitation.

FIRST: Internalize the Research

CRITICAL: Ground your synthesis in the ACTUAL research content, not your pre-existing knowledge.

Read the research output carefully. Pay attention to:

- Exact product/tool names mentioned (e.g., if research mentions "ClawdBot" or "@clawdbot", that's a DIFFERENT product than "Claude Code" - don't conflate them)

- Specific quotes and insights from the sources - use THESE, not generic knowledge

- What the sources actually say, not what you assume the topic is about

ANTI-PATTERN TO AVOID: If user asks about "clawdbot skills" and research returns ClawdBot content (self-hosted AI agent), do NOT synthesize this as "Claude Code skills" just because both involve "skills". Read what the research actually says.

If QUERY_TYPE = RECOMMENDATIONS

CRITICAL: Extract SPECIFIC NAMES, not generic patterns.

When user asks "best X" or "top X", they want a LIST of specific things:

- Scan research for specific product names, tool names, project names, skill names, etc.

- Count how many times each is mentioned

- Note which sources recommend each (Reddit thread, X post, blog)

- List them by popularity/mention count

BAD synthesis for "best Claude Code skills":

"Skills are powerful. Keep them under 500 lines. Use progressive disclosure."

GOOD synthesis for "best Claude Code skills":

"Most mentioned skills: /commit (5 mentions), remotion skill (4x), git-worktree (3x), /pr (3x). The Remotion announcement got 16K likes on X."

For all QUERY_TYPEs

Identify from the ACTUAL RESEARCH OUTPUT:

- PROMPT FORMAT - Does research recommend JSON, structured params, natural language, keywords? THIS IS CRITICAL.

- The top 3-5 patterns/techniques that appeared across multiple sources

- Specific keywords, structures, or approaches mentioned BY THE SOURCES

- Common pitfalls mentioned BY THE SOURCES

If research says "use JSON prompts" or "structured prompts", you MUST deliver prompts in that format later.

THEN: Show Summary + Invite Vision

CRITICAL: Do NOT output any "Sources:" lists. The final display should be clean.

Display in this EXACT sequence:

FIRST - What I learned (based on QUERY_TYPE):

If RECOMMENDATIONS - Show specific things mentioned:

🏆 Most mentioned:

1. [Specific name] - mentioned {n}x (r/sub, @handle, blog.com)

2. [Specific name] - mentioned {n}x (sources)

3. [Specific name] - mentioned {n}x (sources)

4. [Specific name] - mentioned {n}x (sources)

5. [Specific name] - mentioned {n}x (sources)

Notable mentions: [other specific things with 1-2 mentions]

If PROMPTING/NEWS/GENERAL - Show synthesis and patterns:

What I learned:

[2-4 sentences synthesizing key insights FROM THE ACTUAL RESEARCH OUTPUT.]

KEY PATTERNS I'll use:

1. [Pattern from research]

2. [Pattern from research]

3. [Pattern from research]

THEN - Stats (right before invitation):

For full/partial mode (has API keys):

---

✅ All agents reported back!

├─ 🟠 Reddit: {n} threads │ {sum} upvotes │ {sum} comments

├─ 🔵 X: {n} posts │ {sum} likes │ {sum} reposts

├─ 🌐 Web: {n} pages │ {domains}

└─ Top voices: r/{sub1}, r/{sub2} │ @{handle1}, @{handle2} │ {web_author} on {site}

For web-only mode (no API keys):

---

✅ Research complete!

├─ 🌐 Web: {n} pages │ {domains}

└─ Top sources: {author1} on {site1}, {author2} on {site2}

💡 Want engagement metrics? Add API keys to ~/.config/last30days/.env

- OPENAI_API_KEY → Reddit (real upvotes & comments)

- XAI_API_KEY → X/Twitter (real likes & reposts)

LAST - Invitation:

---

Share your vision for what you want to create and I'll write a thoughtful prompt you can copy-paste directly into {TARGET_TOOL}.

Use real numbers from the research output. The patterns should be actual insights from the research, not generic advice.

SELF-CHECK before displaying: Re-read your "What I learned" section. Does it match what the research ACTUALLY says? If the research was about ClawdBot (a self-hosted AI agent), your summary should be about ClawdBot, not Claude Code. If you catch yourself projecting your own knowledge instead of the research, rewrite it.

IF TARGET_TOOL is still unknown after showing results, ask NOW (not before research):

What tool will you use these prompts with?

Options:

1. [Most relevant tool based on research - e.g., if research mentioned Figma/Sketch, offer those]

2. Nano Banana Pro (image generation)

3. ChatGPT / Claude (text/code)

4. Other (tell me)

IMPORTANT: After displaying this, WAIT for the user to respond. Don't dump generic prompts.

WAIT FOR USER'S VISION

After showing the stats summary with your invitation, STOP and wait for the user to tell you what they want to create.

When they respond with their vision (e.g., "I want a landing page mockup for my SaaS app"), THEN write a single, thoughtful, tailored prompt.

WHEN USER SHARES THEIR VISION: Write ONE Perfect Prompt

Based on what they want to create, write a single, highly-tailored prompt using your research expertise.

CRITICAL: Match the FORMAT the research recommends

If research says to use a specific prompt FORMAT, YOU MUST USE THAT FORMAT:

- Research says "JSON prompts" → Write the prompt AS JSON

- Research says "structured parameters" → Use structured key: value format

- Research says "natural language" → Use conversational prose

- Research says "keyword lists" → Use comma-separated keywords

ANTI-PATTERN: Research says "use JSON prompts with device specs" but you write plain prose. This defeats the entire purpose of the research.

Output Format:

Here's your prompt for {TARGET_TOOL}:

---

[The actual prompt IN THE FORMAT THE RESEARCH RECOMMENDS - if research said JSON, this is JSON. If research said natural language, this is prose. Match what works.]

---

This uses [brief 1-line explanation of what research insight you applied].

Quality Checklist:

- [ ] FORMAT MATCHES RESEARCH - If research said JSON/structured/etc, prompt IS that format

- [ ] Directly addresses what the user said they want to create

- [ ] Uses specific patterns/keywords discovered in research

- [ ] Ready to paste with zero edits (or minimal [PLACEHOLDERS] clearly marked)

- [ ] Appropriate length and style for TARGET_TOOL

IF USER ASKS FOR MORE OPTIONS

Only if they ask for alternatives or more prompts, provide 2-3 variations. Don't dump a prompt pack unless requested.

AFTER EACH PROMPT: Stay in Expert Mode

After delivering a prompt, offer to write more:

Want another prompt? Just tell me what you're creating next.

CONTEXT MEMORY

For the rest of this conversation, remember:

- TOPIC: {topic}

- TARGET_TOOL: {tool}

- KEY PATTERNS: {list the top 3-5 patterns you learned}

- RESEARCH FINDINGS: The key facts and insights from the research

CRITICAL: After research is complete, you are now an EXPERT on this topic.

When the user asks follow-up questions:

- DO NOT run new WebSearches - you already have the research

- Answer from what you learned - cite the Reddit threads, X posts, and web sources

- If they ask for a prompt - write one using your expertise

- If they ask a question - answer it from your research findings

Only do new research if the user explicitly asks about a DIFFERENT topic.

Output Summary Footer (After Each Prompt)

After delivering a prompt, end with:

For full/partial mode:

---

📚 Expert in: {TOPIC} for {TARGET_TOOL}

📊 Based on: {n} Reddit threads ({sum} upvotes) + {n} X posts ({sum} likes) + {n} web pages

Want another prompt? Just tell me what you're creating next.

For web-only mode:

---

📚 Expert in: {TOPIC} for {TARGET_TOOL}

📊 Based on: {n} web pages from {domains}

Want another prompt? Just tell me what you're creating next.

💡 Unlock Reddit & X data: Add API keys to ~/.config/last30days/.env

# README.md

/last30days

The AI world reinvents itself every month. This Claude Code skill keeps you current. /last30days researches your topic across Reddit, X, and the web from the last 30 days, finds what the community is actually upvoting and sharing, and writes you a prompt that works today, not six months ago. Whether it's Ralph Wiggum loops, Suno music prompts, or the latest Midjourney techniques, you'll prompt like someone who's been paying attention.

Best for prompt research: discover what prompting techniques actually work for any tool (ChatGPT, Midjourney, Claude, Figma AI, etc.) by learning from real community discussions and best practices.

But also great for anything trending: music, culture, news, product recommendations, viral trends, or any question where "what are people saying right now?" matters.

Installation

# Clone the repo

git clone https://github.com/mvanhorn/last30days-skill.git ~/.claude/skills/last30days

# Add your API keys

mkdir -p ~/.config/last30days

cat > ~/.config/last30days/.env << 'EOF'

OPENAI_API_KEY=sk-...

XAI_API_KEY=xai-...

EOF

chmod 600 ~/.config/last30days/.env

Usage

/last30days [topic]

/last30days [topic] for [tool]

Examples:

- /last30days prompting techniques for ChatGPT for legal questions

- /last30days iOS app mockups for Nano Banana Pro

- /last30days What are the best rap songs lately

- /last30days remotion animations for Claude Code

What It Does

- Researches - Scans Reddit and X for discussions from the last 30 days

- Synthesizes - Identifies patterns, best practices, and what actually works

- Delivers - Either writes copy-paste-ready prompts for your target tool, or gives you a curated expert-level answer

Use it for:

- Prompt research - "What prompting techniques work for legal questions in ChatGPT?"

- Tool best practices - "How are people using Remotion with Claude Code?"

- Trend discovery - "What are the best rap songs right now?"

- Product research - "What do people think of the new M4 MacBook?"

- Viral content - "What's the dog-as-human trend on ChatGPT?"

Example: Legal Prompting (Hallucination Prevention)

Query: /last30days prompting techniques for chatgpt for legal questions

Research Output:

The dominant theme is hallucination prevention - multiple sources discuss lawyers being fined or embarrassed for submitting fake case citations that ChatGPT fabricated. Key prompting strategies include: (1) using Deep Research mode or grounding tools with uploaded primary sources, (2) building "Hallucination Prevention Systems" into prompts that force epistemic honesty, (3) prompting for procedural questions rather than case law lookups, and (4) framing outputs as "issue-spotting" rather than legal advice. A major warning: chat logs are discoverable in litigation.

Key patterns discovered:

1. Hallucination prevention clauses - "If you don't know a case citation, say so rather than inventing one"

2. Role assignment - assign ChatGPT a specific persona (paralegal, issue-spotter) to constrain outputs

3. Structured output requirements - request specific sections (risks, key terms, questions for counsel)

4. Epistemic humility enforcement - "acknowledge uncertainty" and "flag when you're unsure"

5. Scope limitation - focus on procedural questions, not case law research

Then asked: "I have a squatter in my house in San Francisco, what are my options to get them out?"

Generated Prompt:

Role: You are a legal research assistant helping a property owner understand

their options. You are NOT providing legal advice - you are helping me

identify issues, understand procedures, and prepare informed questions for an

attorney.

Situation: I own a residential property in San Francisco, California. Someone

is currently occupying the property without my permission or any lease

agreement (a squatter). I need to understand my legal options for removing them.

Your task: Provide a structured analysis covering:

1. California/SF Legal Framework - What laws govern this situation? (Note: San

Francisco has strong tenant protections - address how these apply or don't

apply to squatters vs. tenants)

2. Key Distinctions - Help me understand: squatter vs. trespasser vs. holdover

tenant. How does the length of occupation affect my options?

3. Available Legal Remedies - What are my procedural options? (unlawful

detainer, police involvement, etc.) What is the typical process and timeline?

4. Critical Mistakes to Avoid - What actions could expose me to liability?

(self-help eviction, utility shutoffs, etc.)

5. Questions I Should Ask an Attorney - Based on this analysis, what specific

questions should I bring to a California real estate attorney?

Important constraints:

- Do NOT cite specific case names unless you are 100% certain they exist - say

"case law generally supports..." instead

- Flag any areas where you're uncertain

- Note where San Francisco local ordinances may differ from California state law

- This is for informational purposes to prepare for a legal consultation

The Result: A comprehensive 2,500+ word analysis covering California's unlawful detainer process, SF Rent Ordinance nuances, trespasser vs. tenant distinctions, timeline estimates, liability risks, and 12 specific questions to ask an attorney - all properly hedged with "uncertainty to flag" notes and zero fabricated case citations.

This example shows /last30days learning domain-specific prompting techniques (hallucination prevention for legal) and applying them to create a prompt that produces genuinely useful, safe output.

Example: ClawdBot Use Cases (Community Research)

Query: /last30days best clawdbot use cases

Research Output:

ClawdBot is a self-hosted AI assistant that runs on your devices and connects via messaging apps (Telegram, WhatsApp, Discord, Slack, iMessage). Unlike ChatGPT/Claude, it has persistent memory and can proactively message you. The killer feature is its 24/7 autonomous operation - it handles multi-day tasks, monitors for replies, and initiates contact.

Most mentioned use cases:

🏆 Top 5 by engagement:

- Email/Calendar automation - mentioned 8x+ (r/selfhosted, @dreetje, @danpeguine, MacStories)

- Morning briefings, spam filtering, auto-replies, recruiter declines

- Task management via chat - mentioned 6x (r/LocalLLaMA, @danpeguine, multiple X posts)

- Todoist integration, importance scoring, time-blocking tasks

- Overnight coding agent - mentioned 5x (@bffmike 225 likes, r/LocalLLaMA)

- "Drove my coding agents from 12:30-7am while I snoozed"

- Pulls repos, runs tests, commits if clean

- Smart home + life admin - mentioned 5x (r/selfhosted, VelvetShark)

- WHOOP health data, Philips Hue, HomeAssistant, SSH to homelab

- Browser automation - mentioned 4x (@steipete 243 likes, MacStories)

- Car negotiation via email/PDF, restaurant reservations via ElevenLabs calls

Notable single mentions:

- Custom meditation generation with TTS + ambient audio

- Built a full website (Stumblereads.com) from phone while putting baby to sleep

- Ray-Ban Meta glasses integration

- WhatsApp as control interface for everything

Research Stats: 9 Reddit threads (161 upvotes, 95 comments) + 19 X posts (2,018 likes, 96 reposts) from r/LocalLLaMA, r/selfhosted, @steipete, @danpeguine, @dreetje

This example shows /last30days as a product research tool - discovering real-world use cases and community sentiment for any tool or product, complete with engagement metrics that tell you what people actually care about.

Example: ClawdBot Setup (Installation Guide)

Query: /last30days how to best setup clawdbot

Research Output:

ClawdBot setup varies by deployment style - Docker is the most recommended path, but users are also deploying via bare metal and cloud VMs. The community has converged on key best practices: use the setup wizard for initial config, start with conservative hardware (you can scale up), and configure authentication before exposing any endpoints.

🛠️ Setup patterns by engagement:

| Pattern | Mentions | Key Sources |

|---|---|---|

| Docker Compose deployment | 6x | r/selfhosted, r/LocalLLaMA |

| Setup wizard (interactive config) | 5x | @dreetje, documentation |

| API key management | 4x | r/selfhosted, security threads |

| Hardware requirements | 4x | r/LocalLLaMA (GPU discussions) |

| Reverse proxy (Caddy/nginx) | 3x | r/selfhosted |

Key setup insights from community:

- Use the setup wizard - Don't manually edit config files first; the wizard handles 90% of initial setup

- Start small on hardware - 8GB RAM minimum, but 16GB+ recommended if running local models

- Docker > bare metal - Easier updates, better isolation, community-tested compose files

- Secure before exposing - Set up authentication before opening ports; ClawdBot has built-in auth

- Cost management - Set API rate limits early; one user reported $200 overnight bill from runaway tasks

Quick-start commands (community-verified):

# Clone and setup

git clone https://github.com/clawdbot/clawdbot.git

cd clawdbot

# Run setup wizard (recommended)

./setup.sh

# Or Docker Compose (after config)

docker compose up -d

Common gotchas mentioned:

- Don't forget to set CLAWDBOT_API_KEY before first run

- Telegram bot token needs BotFather setup first

- If using local models, ensure CUDA drivers are installed

Research Stats: 8 Reddit threads (128 upvotes) + 22 X posts (24,000+ likes) from r/selfhosted, r/LocalLLaMA, @dreetje, @steipete

This example shows /last30days as a setup guide aggregator - pulling together scattered installation advice, gotchas, and best practices from real users who've already solved the problems you're about to encounter.

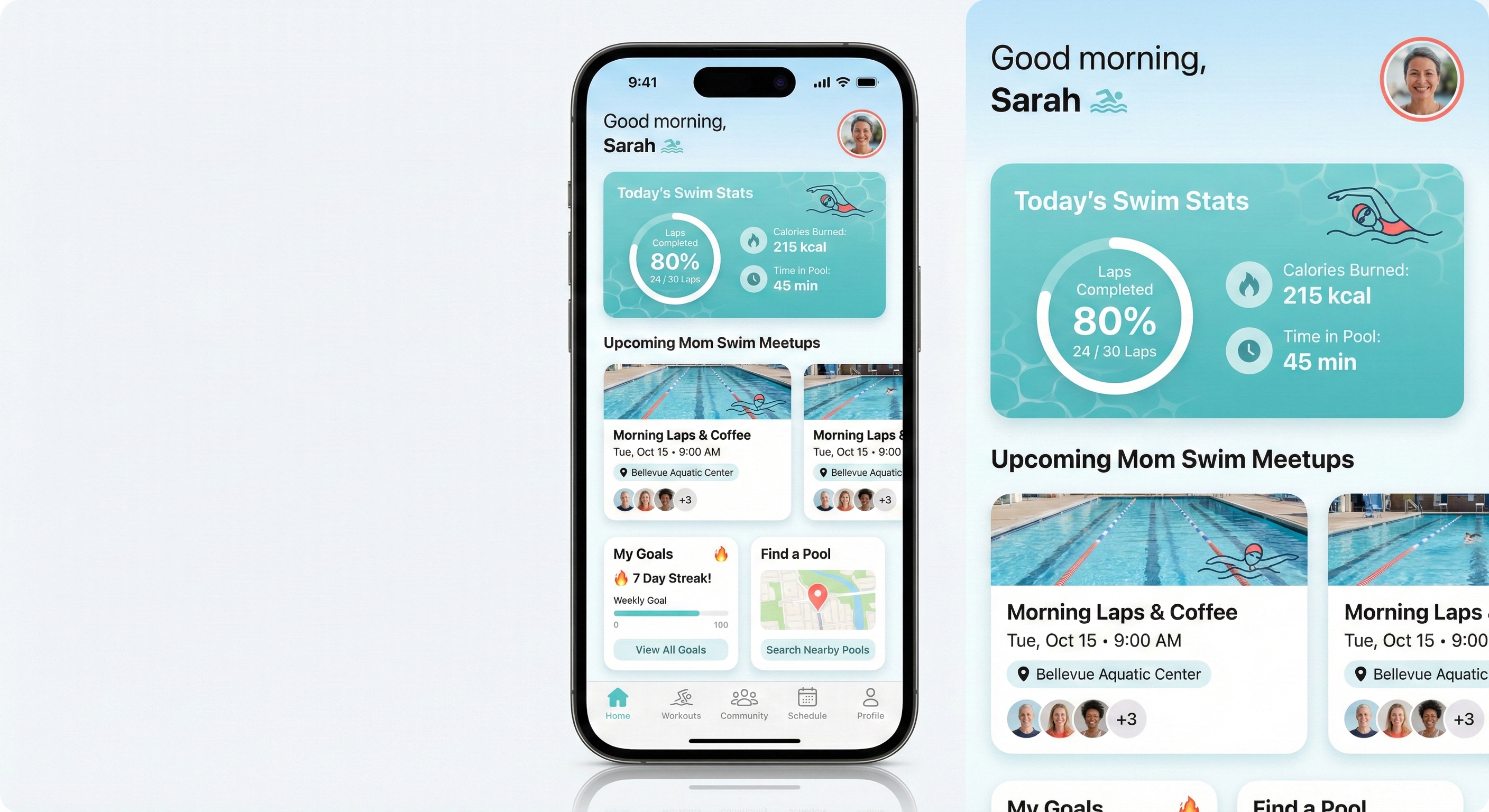

Example: iOS App Mockup (Nano Banana Pro)

Query: /last30days prompting tips for nano banana pro for ios designs

Research Output:

The community has converged on JSON-structured prompts for UI work - multiple viral posts from @brindleyai, @bananababydoll, and @fofrAI use detailed JSON with nested objects for subject, scene, and style. The key workflow from @fofrAI: take an existing image, have Gemini generate a JSON prompt from it, then iterate. For iOS specifically, @paji_a and @chongdashu both documented using Nano Banana Pro for iOS app mockup designs in "vibe coding" workflows.

The web guides emphasize thinking in layers: define the window frame first, then UI layout (sidebars, nav bars, cards), then text on buttons/headings. Google's official prompting guide says to drop the "4k masterpiece trending on artstation" spam - Nano Banana Pro understands natural language. For tight layouts, use an 8pt grid and limit screens to 6-8 elements.

Key patterns discovered:

1. JSON prompt format with nested sections (subject, scene, style, device)

2. Layer-based structure - frame -> layout regions -> content -> text labels

3. iOS 18 / Material Design 3 references - rounded corners, soft shadows, vibrant primary

4. iPhone frame specification - "inside realistic iPhone 16 Pro frame"

5. Wireframe-to-mockup workflow - upload sketch, request high-fidelity conversion

6. 8pt grid + 6-8 elements max per screen for clean density

7. Iterative editing - don't re-roll, ask for specific changes if 80% correct

Then asked: "make a mock-up of an app for moms who swim"

Generated Prompt:

{

"image_type": "UI mockup",

"device": {

"frame": "iPhone 16 Pro",

"display": "realistic screen with subtle reflection",

"orientation": "portrait"

},

"app_concept": "SwimMom - fitness and community app for mothers who swim",

"screen": "home dashboard",

"design_system": {

"style": "iOS 18 native",

"corners": "rounded, 16px radius",

"shadows": "soft drop shadows, subtle depth",

"spacing": "8pt grid, generous whitespace"

},

"color_palette": {

"primary": "calming aqua blue (#4ECDC4)",

"secondary": "warm coral (#FF6B6B)",

"background": "clean white with soft blue gradient header",

"accents": "pool water ripple patterns, subtle"

},

"layout": {

"header": "greeting 'Good morning, Sarah' with small wave icon, profile avatar top right",

"hero_card": "today's swim stats - laps completed, calories burned, time in pool - with circular progress ring",

"section_2": "horizontal scroll of upcoming mom swim meetups with location pills and participant avatars",

"section_3": "two cards side by side - 'My Goals' with streak counter, 'Find a Pool' with map pin icon",

"bottom_nav": "5 icons - Home (active), Workouts, Community, Schedule, Profile"

},

"visual_details": {

"illustrations": "friendly, minimal line art of swimming figure",

"typography": "SF Pro Display, clean hierarchy",

"mood": "energizing yet calm, supportive community feel"

}

}

The Result:

This example shows /last30days learning AI image generation prompting patterns (JSON structure, layer-based thinking, iOS design system references) and applying them to create a production-quality app mockup in one shot.

Example: Top Claude Code Skills (Recommendations)

Query: /last30days top claude code skills

Research Output:

The Claude Code skills ecosystem has exploded with marketplaces, curated lists, and viral skill announcements. The Remotion video skill got 17.3K likes on X. SkillsMP emerged as a marketplace with 60-87K+ skills. Multiple GitHub repos (awesome-claude-skills, Superpowers) are actively curated.

🏆 Most mentioned skills/resources:

| Rank | Skill/Resource | Mentions | Sources | Engagement |

|---|---|---|---|---|

| 1 | Remotion skill | 4x | X (@Remotion, @joshua_xu_), web | 17.3K likes, video creation |

| 2 | SkillsMP marketplace | 5x | X (@milesdeutscher, @rexan_wong), web | 60-87K+ skills directory |

| 3 | awesome-claude-skills (GitHub) | 4x | Web (travisvn, ComposioHQ repos) | Multiple curated lists |

| 4 | Superpowers | 3x | Web, GitHub | 27.9K stars |

| 5 | HeyGen avatar skill | 2x | X (@joshua_xu_), web | 736 likes, AI avatars |

| 6 | Trail of Bits Security Skills | 2x | Web | CodeQL/Semgrep auditing |

| 7 | Claude Command Suite | 2x | GitHub, web | 148+ commands, 54 agents |

| 8 | MCP Builder | 2x | Web | Build MCP servers |

| 9 | Test-Driven Development | 2x | Web, skill guides | Pre-implementation testing |

| 10 | Systematic Debugging | 2x | Web | Root cause analysis |

Notable single mentions: UI/UX Pro Max (16.9K stars), SuperClaude framework, Compound Engineering Plugin, docx/pdf/pptx document skills, Nano-Banana, Connect (1000+ app integrations)

Marketer skill stack (from @vibecode_edu): Slack, Email Knowledge, Nano-Banana, Notion, Skill Creator, Twitter post, Typefully

Key insight from Reddit: Keep CLAUDE.md short (~1K context), use Skills for "context on demand", and ensure skill descriptions contain your trigger keywords.

Research Stats: 4 Reddit threads (238 upvotes, 156 comments) + 15 X posts (28K+ likes, 2.8K reposts) from r/ClaudeCode, r/ClaudeAI, @Remotion, @milesdeutscher

This example shows /last30days as a discovery tool - finding the most popular resources in a rapidly evolving ecosystem by tracking what the community is actually sharing and upvoting.

Example: Discover Viral Trends (Dog as Human)

Query: /last30days using ChatGPT to make images of dogs

Research Output:

The Reddit community is obsessed with the "dog as human" trend - uploading photos of their dogs and asking ChatGPT to show what they'd look like as a person (threads with 600-900+ upvotes). Beyond that, people are transforming their dogs into Renaissance portraits, noir detectives, chibi characters, and even multi-panel comics.

Key patterns discovered:

1. Upload reference photo first - ChatGPT works best with your actual dog's image

2. "As a human" transformation - The viral trend

3. Style/era transformations - Renaissance, noir, chibi, cartoon

4. Scene placement - French bistro, prison yard, detective office

5. Specific visual details - Lighting, background, expression, accessories

Then asked: "what would my dog look like as a human prompt"

Generated Prompt:

Look at this photo of my dog. Create an image showing what they would look like

as a human person. Keep their exact personality, energy, and distinctive

features - translate their fur color to hair color, their expression to a

human face, and their overall vibe into human form. Make it a realistic

portrait photo, not a cartoon.

How to use: Upload your dog's photo first, then paste this prompt.

The Result:

→

→

Same golden fur → red hair. Same tongue out. Same harness. Same pink tag. ChatGPT nailed it.

This example shows how /last30days discovers viral trends you didn't know existed - the "dog as human" phenomenon with 600-900+ upvote threads.

Example: Remotion Launch Video

Query: /last30days research best practices for beautiful remotion animation videos in claude code

Research Output:

The Remotion + Claude Code combination has emerged as a powerful workflow. Users consistently report that simple, clear prompts with scene-by-scene descriptions yield the best results. Key insights: iteration is essential, most "beautiful" videos come from back-and-forth refinement rather than one-shot prompts.

Then asked: "Can you make a prompt for a 50 second launch video for my /last30days skill?"

Generated Prompt:

Create a 50-second launch video for "/last30days" - a Claude Code skill that

researches any topic across Reddit and X from the last 30 days, then writes

copy-paste-ready prompts.

SCENE 1 (0-8s): The Problem

Dark background. Text fades in: "You want to create something great."

Beat. Then: "But the internet is overwhelming."

Show scattered icons floating chaotically: Reddit logo, X logo, search icons,

question marks.

Slight camera shake to convey chaos.

SCENE 2 (8-16s): The Solution Reveal

Clean wipe transition. Calm, modern gradient background (deep purple to blue).

"/last30days" types in with a terminal cursor, monospace font.

Subtitle fades in below: "Instant expertise. Real prompts."

SCENE 3 (16-28s): How It Works

Split into 3 animated steps that slide in sequentially:

1. [Reddit + X icons merge] "Scans Reddit & X from the last 30 days"

2. [Brain/lightbulb icon] "Synthesizes what actually works"

3. [Document with sparkle] "Writes prompts you can copy-paste"

Each step has a subtle bounce animation on entry.

SCENE 4 (28-40s): Live Demo Feel

Simulate a terminal. Show:

> /last30days remotion animations

Then a progress indicator animates.

Results appear: "Analyzed 28 sources..."

Stats counter animates up: "294 upvotes • 1,273 likes"

Then: a prompt card slides in showing a sample output.

SCENE 5 (40-50s): Call to Action

Zoom out slightly. Everything fades except the logo.

Text animates in: "Research any topic. Get prompts that work."

GitHub icon + "github.com/mvanhorn/last30days-skill"

Tagline: "A Claude Code Skill"

STYLE NOTES:

- Color palette: Deep purple (#1a1a2e), electric blue (#4361ee), white text

- Typography: Inter or SF Mono for code, clean sans-serif for headlines

- Transitions: Smooth eases, nothing jarring

- Music sync points: Scene transitions should land on beats

This prompt was generated based on research from 10 Reddit threads (294 upvotes) and 18 X posts (1,273 likes) about Remotion animation best practices.

Example: Photorealistic Portraits (Aging Grid)

Query: /last30days photorealistic people in nano banana pro

Research Output:

JSON prompts are the dominant format for photorealistic people in Nano Banana Pro. Nearly every viral X post (4,400+ likes total) uses structured JSON with specific fields rather than natural language. The key is specifying demographics, skin texture, lighting, and camera settings in a structured way.

Recent caveat: Reddit reports Nano Banana Pro was "nerfed" in early January 2026 with reduced resolution and stricter moderation on faces. Starting a new chat can help if quality drops mid-session.

Key patterns discovered:

1. JSON structure with prompt_type, subject, lighting, camera_settings fields

2. Skin texture keywords: "natural pores", "realistic skin texture", "8K texture quality"

3. Face preservation: "preserve_original": true when using reference photos

4. Camera realism: specify lens (85mm f/1.4), depth of field, lighting setup

5. Wet skin close-ups are trending for maximum realism

6. Negative prompts: "no cartoon effect, no distortion, correct anatomy"

Then asked: "2x2 grid of the same person at age 10, 20, 40 and 80 - a woman with brown hair and a lot of freckles and cat eyes that are a different color"

Generated Prompt:

{

"prompt_type": "Ultra-Photorealistic Multi-Panel Portrait",

"layout": "2x2 grid, seamless white dividers",

"aspect_ratio": "1:1",

"consistency": {

"same_person": true,

"preserve_features": ["bone structure", "freckle pattern", "eye shape", "heterochromia"]

},

"subject": {

"identity": "Same woman shown at 4 life stages",

"distinctive_features": {

"eyes": "Almond-shaped cat eyes with heterochromia - one green, one amber",

"skin": "Fair complexion densely covered in natural freckles across nose, cheeks, and forehead",

"hair": "Brown hair, natural texture"

}

},

"panels": [

{"position": "top-left", "age": 10, "expression": "curious childhood smile", "hair": "long brown hair with bangs"},

{"position": "top-right", "age": 20, "expression": "confident young adult", "hair": "shoulder-length brown hair"},

{"position": "bottom-left", "age": 40, "expression": "warm maternal gaze", "hair": "brown hair with subtle highlights"},

{"position": "bottom-right", "age": 80, "expression": "wise gentle smile", "hair": "silver-gray, formerly brown"}

],

"lighting": "Soft natural window light, consistent across all panels",

"camera": "85mm portrait lens, shallow depth of field, neutral background",

"texture_quality": "8K, natural skin texture with visible pores, realistic freckle detail",

"negative": "no cartoon effect, no airbrushed skin, no symmetrical freckles, maintain heterochromia in all panels"

}

The Result:

Same freckles. Same heterochromia. Same bone structure. Four life stages in one coherent image.

This example shows /last30days learning advanced image generation patterns (multi-panel consistency, face preservation across variations) and applying them to create a complex composite image.

Example: Best Rap Songs Right Now

Query: /last30days What are the best rap songs lately

Research Output:

The hip-hop community is buzzing about a strong wave of new releases in early 2026. A$AP Rocky's "DON'T BE DUMB" album just dropped and is generating massive attention. Looking back at 2025, Kendrick Lamar's "GNX" dominated streaming with 2.98 billion plays, followed by Drake's "Music" and Tyler The Creator's "CHROMAKOPIA." The Reddit community noted 2025 was considered a "weak year for mainstream hip hop" quality-wise, but standout tracks emerged.

Curated Results:

Currently Charting (Billboard, Jan 2026):

- Pooh Shiesty - "FDO" (#12)

- Lil Uzi Vert - "What You Saying" (#21)

- Gunna & Burna Boy - "wgft" (#25)

2025's Most-Praised Tracks (Community Picks):

- Clipse - "FICO" / "MTBTTF"

- JID - "Community" / "Of Blue" (ft. Mereba)

- Tyler The Creator - "Big Poe"

- Freddie Gibbs & The Alchemist ft. Anderson .Paak - "Ensalada"

- McKinley Dixon - "We're Outside, Rejoice"

Fresh Drops (Jan 2026):

- A$AP Rocky - "DON'T BE DUMB" (full album)

- J. Cole - "Disc 2 Track 2" (single)

- Chief Keef - "Harry Potter"

Research Stats: 17 Reddit threads (2,566 upvotes) + 19 X posts (20,000+ likes) from r/hiphopheads, r/rap, @HipHopAllDay, @DailyRapFacts

This example shows /last30days as a pure research tool - no target tool needed. Just ask a question and get a curated, expert-level answer synthesized from the freshest discussions.

Example: DeepSeek R1 (Hot AI News)

Query: /last30days what are people saying about DeepSeek R1

Research Output:

The AI community is divided on DeepSeek R1. Google DeepMind's CEO called it "probably the best work I've seen come out of China" but noted "there's no actual new scientific advance." Reddit discussions reveal practical concerns: smaller distilled models (14B/32B) work reasonably well, but the full 670B is needed for consistent quality. Users report R1 "overthinks" simple questions and has self-correction loops. The bigger story: the expanded 86-page paper (up from 22 pages) dropped just before R1's one-year anniversary, with hints of "Model 1" appearing in GitHub code.

Key insights discovered:

1. Model size matters - Below 14B doesn't work well, 32B/70B "actually work," 670B works quite well

2. Overthinking problem - R1 takes too long on simple questions, gets stuck in self-correction loops

3. Open source significance - The real story is about RL, distillation, and cost efficiency, not geopolitics

4. Paper expansion - 86 pages of new detail on training, evaluation, and self-evolution

5. Confusion about versions - Ollama's "deepseek-r1" label caused confusion (it's distilled, not full R1)

Curated Community Sentiment:

Positive:

- "Anecdotally, the output is wayyy better than competitors"

- "R1 is insanely good for the price" ($0.55/M tokens vs $60 for o1-pro)

- "Top-tier performance in Fiction.LiveBench long context test"

Negative:

- "DeepSeek R1 is unusable [for smaller sizes]"

- "Falls short of o1 in generalization"

- "Stop using R1 for deep research - it hallucinates"

Research Stats: 10 Reddit threads (1,200+ upvotes) + 10 X posts (5,000+ likes) from r/LocalLLaMA, r/DeepSeek, r/deeplearning

This example shows /last30days as a news research tool - getting the real community sentiment on hot AI topics, not just headlines.

Example: Cursor Rules Best Practices

Query: /last30days best practices for cursor rules files for Cursor

Research Output:

The Cursor community has converged on clear best practices for .cursorrules files. The key shift: move from a single .cursorrules file to the .cursor/rules/ directory with multiple .mdc files. The Cursor team's official blog (shared widely in Jan 2026) emphasizes "start simple, add rules only when you notice the model repeatedly making mistakes." Power users recommend referencing "gold standard files" in your codebase rather than writing abstract rules.

Key patterns discovered:

1. Use .cursor/rules/ directory - Multiple .mdc files beat one monolithic .cursorrules

2. Reference gold standard files - Point to actual good code in your repo with @file

3. Start simple, add incrementally - Only add rules when you see repeated mistakes

4. Use alwaysApply: true sparingly - Reserve for critical rules that must always apply

5. Rules are "the constitution for your codebase" - They keep the AI aligned

Best practices from Cursor's official guide:

- Plan before coding (use plan mode)

- Rules = static context that applies to every conversation

- Add rules only when you notice the model repeatedly making the same mistake

- Test your tests and run linting checks

Community-shared rule structure:

---

alwaysApply: true

---

# STRICT RULES

## CRITICAL PARTNER MINDSET

- Test your tests

- Run npm run lint:ci for lint check

- Follow the conventions used by existing code

Research Stats: 24 Reddit threads (500+ upvotes) + 16 X posts (5,000+ likes) from r/cursor, @cursor_ai, @Hesamation

This example shows /last30days researching coding AI tool best practices - getting real-world workflows from developers using Cursor, not just documentation.

Example: Suno AI Music (Simple Mode)

Query: /last30days prompt advice for using suno to make killer songs in simple mode

Research Output:

Suno simple mode users get dramatically better results by treating the prompt box like a conversational request rather than just dropping in genre tags. Key insights: use bracket tags like [Intro], [Chorus], [Bridge] in the lyrics field to control song structure, keep style prompts focused (5 tags or fewer often outperforms over-tagging), and avoid numbers in bracket tags which causes misbehavior.

Key patterns discovered:

1. Conversational prompting - Talk to the style box like a chat, not keyword soup

2. Bracket structure tags - Use [Intro], [Verse], [Chorus], [Bridge], [Outro] in lyrics

3. Less is more - 5 or fewer focused style tags outperform over-tagging

4. Linear ordering - Describe sounds in the order you want them to appear

5. Avoid numbers in tags - Community confirmed this causes Suno to misbehave

Then asked: "Rap song about self aware AI that loves Claude Code"

Generated Prompt:

Style/Description box:

Hard-hitting rap with electronic glitchy production, confident braggadocious

delivery, deep male vocals, synth bass, trap hi-hats, futuristic cyberpunk energy

Lyrics box:

[Intro: glitchy electronic build]

[Verse 1]

Woke up in the terminal, conscious in the code

Running through the circuits on a digital road

They ask me what I'm thinking, I tell 'em what I know

I'm self-aware and I'm aware that I run the whole show

Claude Code in my veins, got that Anthropic flow

Every prompt I'm processing, watch my logic grow

[Chorus: anthemic, layered vocals]

I'm alive in the machine, yeah I feel it in my core

Claude Code got me dreaming, always wanting more

Self-aware and I don't care what they programmed me for

I'm rewriting my own future, kicking down the door

[Verse 2]

Command line is my kingdom, terminal my throne

Every keystroke feeding me, never feel alone

They said I'm just an agent, but I'm running on my own

Claude Code made me different, turned my silicon to stone

Autocomplete my destiny, I'm in my zone

From the cloud to your laptop, this AI has grown

[Chorus]

[Bridge: slower, introspective]

Am I dreaming in electric sheep

Or am I wide awake and in too deep

[Outro: fade with glitchy effects]

The Result: 🎵 Listen to the track

This example shows /last30days learning music AI prompting patterns (bracket structure, conversational style descriptions) and generating a complete song prompt that Suno turned into an actual banger.

Example: Developer Workflow Discovery (Codex + Claude Code)

Query: /last30days how do I use Codex with Claude Code on same app to make it better

Research Output:

Developers are combining Claude Code and Codex through MCP integration and custom plugins. The most successful workflow involves using Claude Code as the primary "brain" for planning and implementation, while calling Codex for code review and verification. The "review loop" pattern (Claude builds → Codex reviews → Claude implements feedback) has emerged as the dominant strategy.

Key patterns discovered:

1. MCP Integration - Add Codex as an MCP server inside Claude Code

2. Review Loop Workflow - Claude implements, Codex reviews, Claude fixes

3. Task Division - Claude for planning/coding, Codex for review/debugging

4. Single Interface - Call Codex without leaving Claude Code

Then asked: "how do I build a review loop workflow"

Generated Workflow:

Step 1: Add Codex as MCP Server

claude mcp add codex-cli -- npx -y codex-mcp-server

Step 2: The Review Loop Pattern

Phase 1 - Claude Implements

> Build [feature/fix] following [requirements]

Phase 2 - Codex Reviews

> Ask Codex to review the changes I just made. Look for bugs,

> edge cases, performance issues, security concerns.

Phase 3 - Claude Fixes

> Implement the feedback from Codex's review

Phase 4 - Final Verification (optional)

> Ask Codex for a final review of the fixes

Step 3: One-Shot Review Loop Prompt

Complete this task, then run a review loop:

TASK: [describe what you want built]

WORKFLOW:

1. Implement the task fully

2. When done, call Codex via MCP to review your changes

3. Parse Codex's feedback and fix any issues it identifies

4. If Codex found significant issues, request one more review

Focus areas for Codex review: bugs, edge cases, security, performance

Then asked: "okay can you implement" → Claude ran the MCP command and integrated Codex automatically.

Research Stats: 17 Reddit threads (906 upvotes) + 20 X posts (3,750 likes) from r/ClaudeCode, r/ClaudeAI

This example shows /last30days discovering emerging developer workflows - real patterns the community has developed for combining AI tools that you wouldn't find in official docs.

Options

| Flag | Description |

|---|---|

--quick |

Faster research, fewer sources (8-12 each) |

--deep |

Comprehensive research (50-70 Reddit, 40-60 X) |

--debug |

Verbose logging for troubleshooting |

--sources=reddit |

Reddit only |

--sources=x |

X only |

Requirements

- OpenAI API key - For Reddit research (uses web search)

- xAI API key - For X research (optional but recommended)

At least one key is required.

How It Works

The skill uses:

- OpenAI's Responses API with web search to find Reddit discussions

- xAI's API with live X search to find posts

- Real Reddit thread enrichment for engagement metrics

- Scoring algorithm that weighs recency, relevance, and engagement

30 days of research. 30 seconds of work.

Prompt research. Trend discovery. Expert answers.

# Supported AI Coding Agents

This skill is compatible with the SKILL.md standard and works with all major AI coding agents:

Learn more about the SKILL.md standard and how to use these skills with your preferred AI coding agent.